How to Fix: Testing Is Falling Behind and Causing Production Bugs

As a Scrum Master, how can you help enforce "Done" and turn tests into a shared, sprint-bound responsibility?

Hello 👋, it’s Vibhor. Welcome to the 🔥 paid member only 🔥 edition of Winning Strategy, a newsletter focused on enhancing product, process, team, and career performance.

Hi Vibhor, our DoD says every story must include automated tests, yet during the sprint, the testers are overloaded and devs move tasks to ‘Done’ without all test cases completed. This backlog of missing tests keeps growing. How can I reinforce the Definition of Done and help the team build a sustainable approach to shared quality ownership so unfinished testing no longer leaks past the sprint?

Thank you for the question.

We have all been there before.

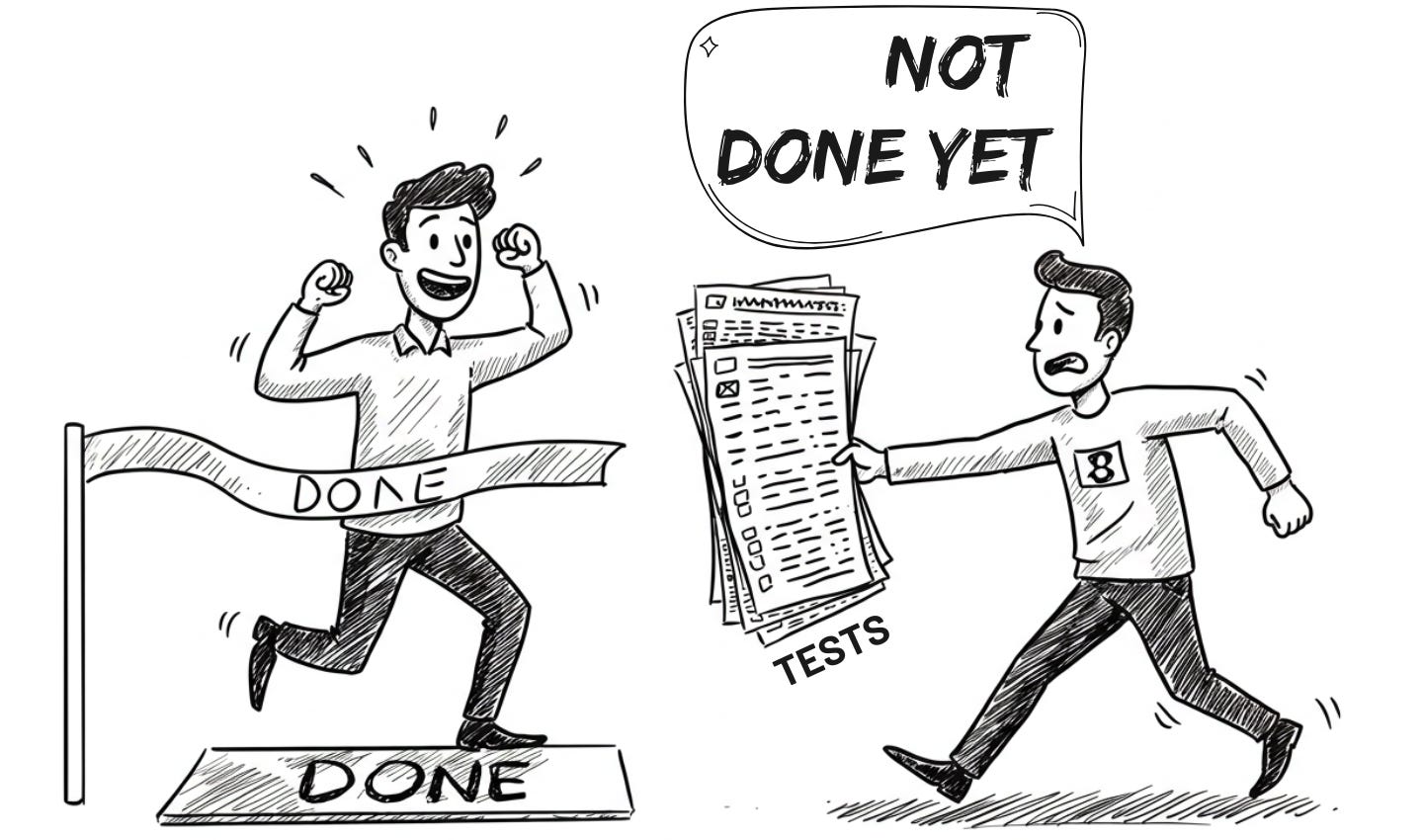

The sprint ends, but testing hasn’t finished yet. Developers move user stories to “Done,” testers drown in unfinished test cases, and production bugs continue to pile up.

Your Definition of Done says every story must include automated tests. But let’s be honest: when the team is overwhelmed, the DoD feels like a suggestion, not a rule.

What to do?

In this “Coach & Fix” post, I will walk you through the process I would follow to fix this issue.

Let’s get started.

First things first: Let’s not try to fix something that isn’t broken.

Before jumping into solutions (for any problem), it is always a good idea to check whether the problem in question is truly a problem or just a perceived inconvenience.

Maybe you don't need to fix this right now!

If your team is delivering value and your stakeholders are happy, then… take a breath.

Not every issue in the process needs an immediate fix.

You can do the following:

Keep a risk backlog (which I see you’re already doing). Track the stories that are going to production without complete test coverage. This will give you data (not gut feelings) about the scope of the problem.

Bring this topic to the team’s attention during the retro. Is the risk from missing tests increasing? Are there production bugs? Are testers or devs stretched?

Work on a solution only when you see a rising risk. Don’t add process for process’s sake.
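If you want the risk backlog to show a trend rather than just a list, here is a minimal sketch of one way to do it. Everything in it is an assumption for illustration: the `RiskEntry` fields, the story keys, the sample data, and the "rising over three sprints" rule are made up, not something your tool or the Scrum Guide prescribes.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class RiskEntry:
    story_id: str          # hypothetical story key
    sprint: int            # sprint in which the story shipped without full tests
    caused_prod_bug: bool  # True if a production bug was later traced to it


def untested_per_sprint(entries):
    """Count stories shipped without complete tests, keyed by sprint.

    Sprints with no entries do not appear in the result.
    """
    counts = Counter(e.sprint for e in entries)
    return dict(sorted(counts.items()))


def risk_is_rising(entries, window=3):
    """True if the count strictly increased across the last `window` recorded sprints."""
    counts = list(untested_per_sprint(entries).values())[-window:]
    return len(counts) == window and all(a < b for a, b in zip(counts, counts[1:]))


# Made-up sample data: 1 untested story in sprint 1, 2 in sprint 2, 3 in sprint 3.
sample = [
    RiskEntry("STORY-1", 1, False),
    RiskEntry("STORY-2", 2, False),
    RiskEntry("STORY-3", 2, True),
    RiskEntry("STORY-4", 3, False),
    RiskEntry("STORY-5", 3, True),
    RiskEntry("STORY-6", 3, False),
]

print(untested_per_sprint(sample))
print(risk_is_rising(sample))
```

A spreadsheet with the same three columns works just as well. The point is to bring a count per sprint to the retro so the team can decide together whether the risk is actually rising.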

Having said that, what if there are actual risks involved?

If that’s the case, I would start by asking the following questions.

Question #1:

Since some stories are leaving the sprint without their automated tests, what concrete issues have we actually experienced so far? Have we had production bugs that automation would have caught, hotfixes or rollbacks, or stakeholder complaints? Or has everything continued to run smoothly despite the growing test backlog?

The answer will show whether missing tests are already impacting your team or are still just a potential risk. This will guide how urgently you should allocate sprint capacity to clear the automation backlog.

Let’s say:

Some production bugs were caused by areas that had no test coverage.

Moving on to the next question.

Question #2:

How is automated-test work currently divided between testers and developers during the sprint? Do developers jump in when testers are overloaded, or is test automation viewed as “tester work”?

The answer will show whether we can unlock additional capacity for testing work by involving developers, and which specific blockers must be addressed to make that possible.

Let’s say:

Developers sometimes help.

Next question.

Question #3:

When a story is shown in Sprint Review, does the PO verify it against the Definition of Done? If tests are missing, is the story sent back to “In Progress”?

The answer will show whether the Definition of Done truly blocks incomplete stories or (silently) lets test debt through.

Let’s say:

The PO accepts the story if the feature works in the demo.

Next…

Question #4:

You’ve mentioned that the backlog of missing tests is growing. About how many story-points or person-days of test work are currently outstanding? Is it trending up, flat, or down each sprint? Where is this work tracked (separate backlog items or nowhere), and does the team or PO actually feel pain from carrying it?

This will put some data on the quality debt. You will know whether the backlog is a minor issue or an increasing risk that deserves urgent capacity and visible tracking.

Let’s say:

15 stories still need tests. The team adds 2–3 more each sprint, so the backlog keeps growing.
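To make that trend concrete, here is a small sketch that projects the test backlog forward. The starting point (15 stories) and the inflow (2–3 per sprint) come from the example above. The six-sprint horizon and the pay-down rate of 5 stories per sprint are my assumptions, picked only to show the arithmetic.

```python
def project_backlog(current, added_per_sprint, paid_down_per_sprint, sprints):
    """Return the backlog size at the end of each sprint (never below zero)."""
    backlog = current
    history = []
    for _ in range(sprints):
        backlog = max(0, backlog + added_per_sprint - paid_down_per_sprint)
        history.append(backlog)
    return history


# No pay-down: the backlog after six more sprints at 2 and at 3 new stories per sprint.
print(project_backlog(15, 2, 0, 6))  # [17, 19, 21, 23, 25, 27]
print(project_backlog(15, 3, 0, 6))  # [18, 21, 24, 27, 30, 33]

# Assumed pay-down of 5 stories per sprint while 3 are still being added.
print(project_backlog(15, 3, 5, 6))  # [13, 11, 9, 7, 5, 3]
```

With these numbers, doing nothing roughly doubles the backlog in six sprints. Even a steady pay-down takes many sprints to clear it unless the team also stops adding new untested stories.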