Problem Solving and Reporting to Stakeholders

The simplest problem solving technique that works every time.

👋 Hello,

Welcome to the ⭐️ free monthly edition ⭐️ of my weekly newsletter, the “Winning Strategy.” Every week, I answer one reader question about agile, product, roles, skills, job interviews, frameworks, working with humans, and anything else that you need answered about your career growth. You can send me your questions here.



On to this week’s question!

Q: Hey Vibhor. Since I took over as the Scrum Master at this company, we've been constantly thrown off track by these urgent customer issues during our sprints. It's causing delays, we are missing goals, and the team's getting frustrated. I'm struggling with how to communicate this effectively to our stakeholders. Do you have any approach or technique in mind that could help me navigate and possibly resolve this? Any help is appreciated.

Absolutely, and thanks for the question.

I understand how challenging it can be to manage such disruptions, especially when you're trying to keep stakeholders calm and informed and also trying to maintain team morale.

Your setting, and the situation you’re in, remind me of a true story I read in a newspaper on my way to Boston for a lecture at Harvard Business School way back in 2018.

The Leaky Faucet

The story talked about a homeowner who was grappling with a persistently leaky faucet.

At first, the homeowner would simply mop up the water. Then he placed a bucket underneath, thinking it was a temporary issue. But over time, as the water started damaging the floor under the sink and causing other problems, he realized he had to address the “root cause.” Instead of continuously dealing with the mess, he traced the leak back to a faulty valve deep inside the wall.

This story about the leaky faucet is a lot like the challenges we often face in projects or teams, especially in Agile and Scrum environments.

It's easy to fix the symptoms, like the dripping water (delays) or the damaged floor (missed sprint goals), but if we don't address the root cause, the faulty valve (mid-sprint interruptions), we'll be stuck in an endless cycle of temporary solutions.

Drawing a parallel to your situation, it seems like you're stuck in a cycle of constantly emptying a bucket under a dripping faucet. It's tiring, repetitive, and doesn't truly solve anything.

What we need is a systematic approach that helps us identify the root cause of the delays, is easy to explain to non-technical people (stakeholders), and leads us to a lasting solution that everyone agrees on. This is where a technique known simply as A3 Problem Solving comes into play.

As a Scrum Master, you're already familiar with the iterative and collaborative nature of the Scrum framework. A3 Problem Solving is a structured problem-solving and continuous-improvement approach. It originates from the Toyota Production System, but it can be integrated into any process that needs structured problem-solving, including Scrum.

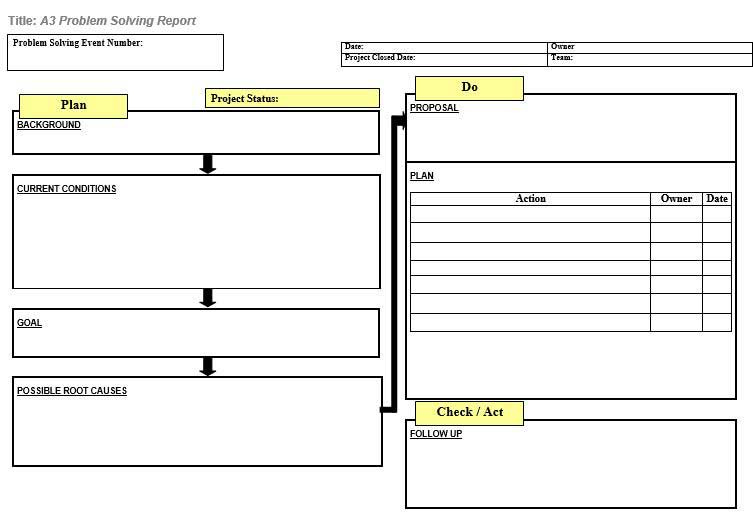

What is A3 Problem Solving?

A3 refers to the international paper size (297 x 420 mm, roughly 11.7 x 16.5 inches; the closest US size is 11x17 inches) on which the technique was traditionally documented.

The A3 problem-solving process is a concise, visual representation of the current problem, analysis, countermeasures, and action plans required to improve the situation.

Picture this:

You've got a problem. Instead of panicking, you grab a single A3-sized sheet of paper (yeah, that's roughly 11x17 inches, but who's measuring?), or open Google Docs or any other document editor, and jot down everything:

the problem,

why it's happening,

what you're gonna do about it, and

how you'll make sure it doesn't come back to haunt you.

It's built on the Plan-Do-Check-Act (PDCA) cycle, so you're always in this loop, tweaking things as you go.

The real magic of A3 isn’t just about that piece of paper. It’s the way of thinking. It's about sitting down, having a real talk about the problem, and learning from it. It's like that old saying,

"Tell me and I forget, teach me and I may remember, involve me and I learn."

With A3, you're right in the thick of it, figuring things out.

Taiichi Ōno of Toyota loved reports that were short and sweet, and thanks to him, A3 became part of Toyota's secret sauce, much like Value Stream Mapping and Kanban boards.

Here's a breakdown of the typical steps in the A3 process:

Problem Statement: Clearly describe the problem.

Current Situation: Provide a visual and/or descriptive representation of the current situation. Use data if possible.

Goal Statement: Define the target or desired outcome.

Root Cause Analysis: Identify the root causes of the problem. Use tools like "5 Whys" or Fishbone (Ishikawa) diagrams.

Countermeasures: Propose solutions or actions to address the identified root causes.

Action Plan: Detail who will do what by when. This section includes action items, timelines, and responsibilities.

Follow-up: Define how to measure and review the effectiveness of the implemented countermeasures, including a plan for what to do if the problem isn't fully resolved.
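If your team keeps its A3 in a shared doc or a script rather than on paper, the seven sections map naturally onto a small record type. The sketch below is a hypothetical Python representation (the `A3Report` class and its field names are my own, not a standard format); its only job is to show which sections are still blank, so nothing gets skipped on the way from problem to follow-up.

```python
from dataclasses import dataclass, field, fields


@dataclass
class A3Report:
    """One A3 sheet: one field per section, in the order the sections are filled in."""
    problem_statement: str = ""
    current_situation: str = ""
    goal_statement: str = ""
    root_cause_analysis: str = ""
    countermeasures: list[str] = field(default_factory=list)
    action_plan: list[str] = field(default_factory=list)
    follow_up: str = ""

    def missing_sections(self) -> list[str]:
        """Return the names of sections that are still empty, in A3 order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


report = A3Report(problem_statement="Urgent customer issues keep disrupting our Sprints.")
print(report.missing_sections())
# ['current_situation', 'goal_statement', 'root_cause_analysis',
#  'countermeasures', 'action_plan', 'follow_up']
```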

Let’s apply this technique to the problem described in the question.

Solving the Problem at Hand

Let’s expand each component of the A3 technique and apply it to your problem.

1. Problem Statement:

To define the problem statement, collaborate with the entire team and answer the following questions.

Note: Discard the questions that don’t apply.

Let’s say the answers come out to be the following:

1. How often have disruptions occurred over the past 6 Sprints?

Disruptions have occurred consistently over the last 6 Sprints.

2. Which types of customer issues frequently cause these disruptions?

The disruptions are mainly due to urgent customer issues related to recent feature deployments.

3. What's our success rate in achieving Sprint goals amidst these disruptions?

We've had a 25% success rate in meeting our Sprint goals because of these disruptions.

4. How do these disruptions affect our team's priority setting and decision-making during Sprints?

Ambiguity in prioritizing between urgent issues and Sprint commitments impacts our delivery predictability.

By combining these answers, we can formulate the problem statement as follows:

"During the last 6 Sprints, our team has been consistently disrupted by urgent customer issues, leading to a 75% failure rate in meeting our Sprint goals. These issues often pertain to recent feature deployments. The ambiguity in prioritizing between these urgent issues and our Sprint commitments is hampering our delivery and predictability."

2. Current Situation:

For the problem described in the question, the following metrics can provide a quantitative and qualitative understanding of the "Current Situation":

Sprint Disruption Frequency: Number of times the team had to address urgent customer issues during each of the past six Sprints.

Issue Origin Breakdown: Percentage of disruptions attributed to specific features or product areas. This helps identify if certain areas are more prone to problems than others.

Sprint Completion Rate: Percentage of committed Sprint backlog items that were completed versus those that remained incomplete due to disruptions.

Response Time: Average time taken to address and resolve the urgent customer issues that caused disruptions.

Team Morale Metrics: Surveys or feedback sessions to gauge the team's sentiment regarding these disruptions.

Sprint Goal Achievement Trend: A chart (e.g., a simple bar or run chart) showing the trend of Sprint goals achieved versus missed over the past 6 Sprints.

Backlog Growth: Measurement of how the product backlog has grown due to incomplete Sprint items getting rolled over.

Priority Switching Instances: Number of times the team had to switch priorities mid-Sprint due to urgent issues.

Work Interruption Duration: Total hours lost due to addressing urgent issues, which could be depicted as a proportion of the entire Sprint duration.

Quality Metrics: Number of defects or bugs reported post-release for the features deployed in the last six Sprints.

These metrics provide a comprehensive view of the current situation and can be depicted through charts, graphs, or tables for easy visualization in the A3 report.
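Several of these metrics are simple ratios over a per-Sprint log. Here is a minimal Python sketch, assuming you record a handful of numbers for each Sprint; the `SprintRecord` fields, the `current_situation` function, and the four Sprints of data are all hypothetical, and it covers only four of the metrics listed above.

```python
from dataclasses import dataclass


@dataclass
class SprintRecord:
    """Hypothetical per-Sprint log; field names are illustrative, not from any tool."""
    disruptions: int          # urgent customer issues handled mid-Sprint
    disruption_hours: float   # total hours spent on those issues
    capacity_hours: float     # hours the team had available in the Sprint
    committed_items: int      # backlog items committed at Sprint Planning
    completed_items: int      # committed items actually finished
    goal_met: bool            # was the Sprint goal achieved?


def current_situation(sprints: list[SprintRecord]) -> dict[str, float]:
    """Summarize a few 'Current Situation' metrics over the given Sprints."""
    n = len(sprints)
    return {
        # Sprint Disruption Frequency, averaged per Sprint
        "avg_disruptions_per_sprint": sum(s.disruptions for s in sprints) / n,
        # Sprint Completion Rate: completed vs. committed backlog items
        "sprint_completion_rate": sum(s.completed_items for s in sprints)
        / sum(s.committed_items for s in sprints),
        # Work Interruption Duration as a share of total capacity
        "interruption_share": sum(s.disruption_hours for s in sprints)
        / sum(s.capacity_hours for s in sprints),
        # Share of Sprints whose goal was met
        "goal_achievement_rate": sum(s.goal_met for s in sprints) / n,
    }


# Made-up data for four Sprints, for illustration only.
history = [
    SprintRecord(4, 30, 400, 10, 7, False),
    SprintRecord(5, 40, 400, 12, 8, False),
    SprintRecord(3, 20, 400, 10, 9, True),
    SprintRecord(6, 50, 400, 11, 6, False),
]
print(current_situation(history))
```

Keeping the raw per-Sprint numbers, rather than only the summaries, makes it easy to redraw the charts for the A3 later.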

Note: It’s up to you and your team to decide which metrics are most relevant and impactful for your context.

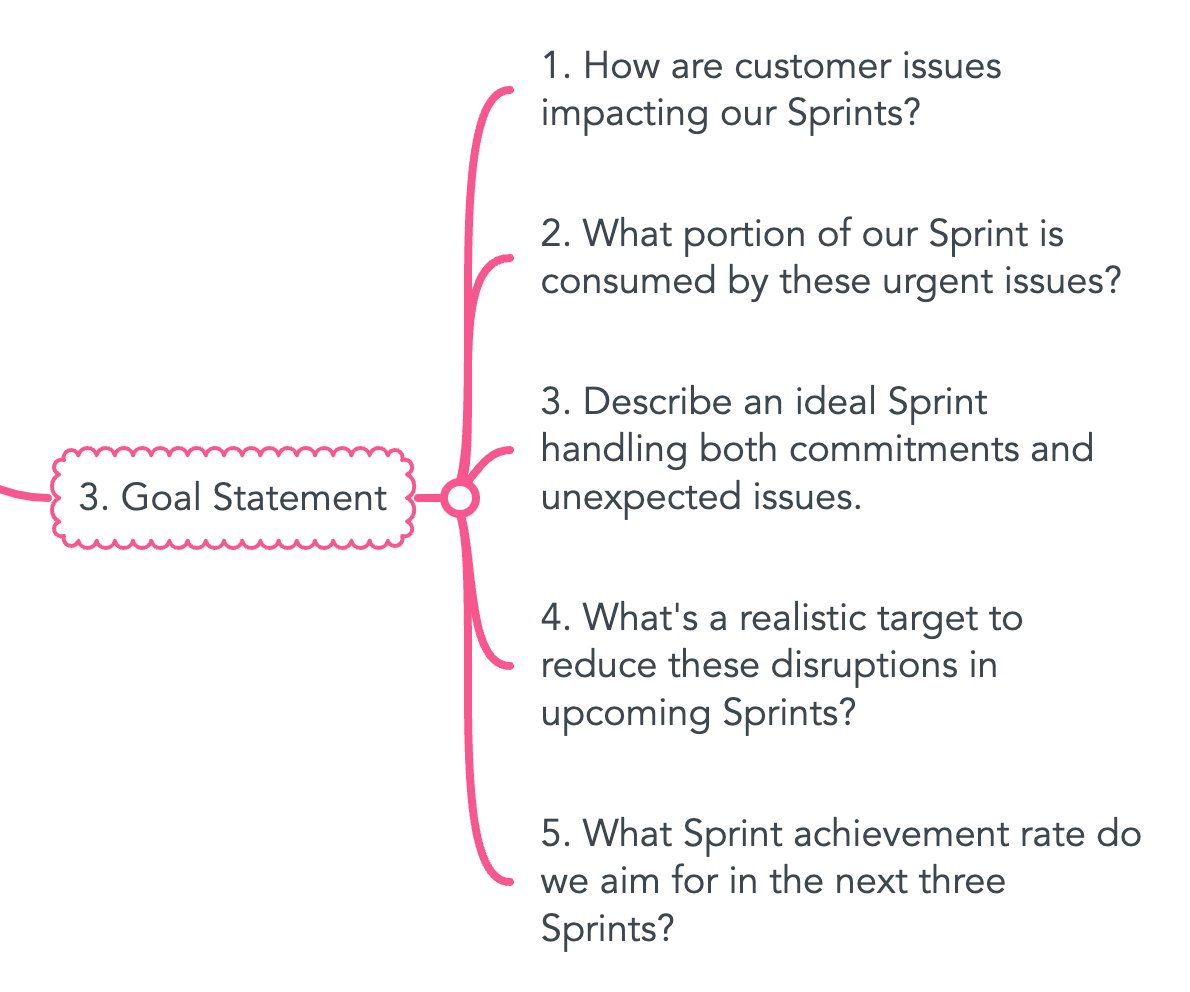

3. Goal Statement:

The goal statement answers the following question:

What specific improvements do we need to achieve?

To define the goal statement, ask your team the following questions:

Let’s say the answers come out to be the following:

1. How are customer issues impacting our Sprints?

They're causing us to frequently divert from our planned tasks, making it hard to fulfill Sprint commitments.

2. What portion of our Sprint is consumed by these urgent issues?

Approximately 30% of our Sprint time gets redirected to address these unexpected customer issues.

3. Describe an ideal Sprint, handling both regular commitments and unexpected issues.

An ideal Sprint would have a dedicated buffer time to manage unexpected issues without affecting our primary goals.

4. What's a realistic target to reduce these disruptions in upcoming Sprints?

We believe we can achieve a 50% reduction if we refine our process and get faster feedback on deployed features.

5. What Sprint achievement rate do we aim for in the next five Sprints?

We're aiming to achieve at least 85% of our Sprint goals over the next 5 Sprints.

By combining these answers, we can formulate the goal statement as follows:

Objective: Reduce Sprint disruptions caused by urgent customer issues.

Magnitude: Achieve a 50% reduction in said disruptions.

Performance Target: Increase our Sprint goal achievement rate to 85% or above.

Secondary Objective: Establish a clear prioritization process for:

Addressing urgent customer concerns.

Ensuring Sprint commitments are not compromised.

Timeline: Implement and see improvements over the next 5 Sprints.
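A goal statement is most useful when it can be checked. Below is a minimal Python sketch, assuming disruptions are measured as an average per Sprint (baseline from the last six Sprints, current from the Sprints after the change). The 50% and 85% defaults come from the goal statement; the function name is hypothetical.

```python
def goals_met(baseline_disruptions: float, current_disruptions: float,
              goal_achievement_rate: float,
              target_reduction: float = 0.50,
              target_achievement: float = 0.85) -> dict[str, bool]:
    """Compare current numbers against the goal statement's targets."""
    if baseline_disruptions <= 0:
        raise ValueError("baseline_disruptions must be positive")
    # Relative reduction in disruptions compared with the baseline
    reduction = (baseline_disruptions - current_disruptions) / baseline_disruptions
    return {
        "disruptions_halved": reduction >= target_reduction,
        "achievement_target_met": goal_achievement_rate >= target_achievement,
    }


# Hypothetical: 4.5 disruptions per Sprint before, 2.0 after, 80% of goals met.
print(goals_met(4.5, 2.0, 0.80))
# {'disruptions_halved': True, 'achievement_target_met': False}
```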

4. Root Cause Analysis:

Use the “5 Whys” to get to the root cause:

Problem: During the Sprints, the team is consistently disrupted by urgent customer issues related to recent feature deployments, leading to a 75% failure rate in meeting Sprint goals.

Why are urgent customer issues consistently disrupting our Sprints?

Because customers are finding problems with the new features soon after they are deployed.

Why are customers discovering problems with the new features soon after deployment?

Because some features are not adequately tested before deployment.

Why are some features not adequately tested before deployment?

Due to time constraints in the Sprints, not all testing scenarios are being covered, and some are being rushed.

Why are there time constraints that prevent comprehensive testing?

The team has been trying to deliver too many features within a single Sprint, leading to limited time for thorough testing.

Why is the team trying to deliver too many features in a single Sprint?

The team's capacity and the expectations from stakeholders are misaligned. There is a lack of clear communication regarding what's feasible in a Sprint, leading to overcommitment.

At the end of this exercise, the root cause seems to be:

“Misalignment between the team's capacity and stakeholder expectations, leading to over-commitment and subsequently inadequate testing.”

Addressing this misalignment and ensuring that Sprints are planned with a realistic capacity in mind can help reduce urgent customer issues and disruptions.
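In a workshop, asking "why" is a conversation, not a function call. Still, writing the loop down makes its shape explicit: each question is about the previous answer, and you stop after about five rounds or when nobody can go deeper. Here is a hedged Python sketch; `five_whys` and the condensed answers are illustrative paraphrases of the example above, and a dictionary lookup stands in for the team discussion.

```python
from typing import Callable, Optional


def five_whys(problem: str, ask_why: Callable[[str], Optional[str]],
              max_depth: int = 5) -> list[str]:
    """Repeatedly ask 'why?' about the latest answer.

    Returns the chain [problem, answer1, answer2, ...]; the last entry is the
    candidate root cause. Stops early when ask_why returns None (no deeper cause).
    """
    chain = [problem]
    for _ in range(max_depth):
        answer = ask_why(chain[-1])
        if answer is None:
            break
        chain.append(answer)
    return chain


answers = {
    "Urgent customer issues disrupt our Sprints":
        "Customers find problems soon after deployment",
    "Customers find problems soon after deployment":
        "Some features are not adequately tested",
    "Some features are not adequately tested":
        "Time constraints cut testing short",
    "Time constraints cut testing short":
        "The team commits to too many features per Sprint",
    "The team commits to too many features per Sprint":
        "Team capacity and stakeholder expectations are misaligned",
}
chain = five_whys("Urgent customer issues disrupt our Sprints", answers.get)
print("Root cause:", chain[-1])
# Root cause: Team capacity and stakeholder expectations are misaligned
```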

5. Countermeasures:

Root Cause: A misalignment between the team's capacity and stakeholder expectations, leading to over-commitment and, subsequently, inadequate testing.

To define countermeasures or suggested ways forward, ask your team the following questions:

Capacity and Overcommitment:

Are we clear on our capacity for each Sprint? If not, what's missing?

We often overestimate our capacity, thinking we can handle more than we actually can. We don't account for unplanned work or emergencies.

How do we currently decide what and how much to commit to? Is there a better way?

Currently, we commit based on a mixture of gut feeling and historical performance. We haven't been using metrics like velocity/capacity consistently.

Potential Countermeasures:

Implement a consistent method for tracking team velocity to better predict future capacity.

Factor in a buffer for unplanned work when committing to Sprint items.

Have regular reviews of the team's capacity and adjust commitments accordingly.
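The first two countermeasures (consistent velocity tracking and a buffer for unplanned work) boil down to simple arithmetic. The sketch below is one way to do it, not the only one. It assumes velocity is measured in story points and that a rolling average of the last three Sprints is a reasonable predictor. The 30% buffer echoes the rough share of Sprint time the team reported losing to urgent issues; the function name and the velocity numbers are hypothetical.

```python
from statistics import mean


def forecast_capacity(past_velocities: list[float], buffer_ratio: float,
                      window: int = 3) -> float:
    """Forecast plannable capacity for the next Sprint.

    Uses the average velocity of the last `window` Sprints, then reserves
    `buffer_ratio` of it for unplanned work such as urgent customer issues.
    """
    if not 0 <= buffer_ratio < 1:
        raise ValueError("buffer_ratio must be in [0, 1)")
    recent = past_velocities[-window:]
    return mean(recent) * (1 - buffer_ratio)


# Hypothetical data: story points completed in the last six Sprints.
velocities = [30, 26, 34, 28, 31, 25]
print(forecast_capacity(velocities, buffer_ratio=0.3))  # about 19.6 points
```

The buffer ratio is best revisited at each capacity review rather than fixed once.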

Stakeholder Expectations:

How effectively are we communicating our capacity and commitments to stakeholders?

We communicate our plans at the beginning of the Sprint, but not always the reasons behind our capacity decisions.

What feedback have we received from stakeholders regarding our delivery and commitments?

None.

How can we better manage or align stakeholder expectations with our realistic capacity?

Perhaps more transparency in our decision-making and regular updates on our progress might help.

Potential Countermeasures:

Organize mid-sprint check-ins with stakeholders to provide updates and address concerns.

Educate stakeholders on iterative delivery and the importance of team capacity.

Implement a clear process for stakeholders to submit requests so they are included in the backlog and prioritized appropriately.

Testing and Quality Assurance:

How confident are we in our current testing protocols?

We're confident in some areas, but there have been instances where critical issues slipped through.

Are there gaps in our testing process that we might be missing?

Not known.

Would additional tools or training improve our testing quality and confidence?

Some team members have expressed interest in additional training on automated testing.

Are our acceptance criteria detailed enough to guide effective testing?

Yes.

Potential Countermeasures:

Invest in training sessions or workshops focused on advanced testing techniques.

Explore and implement additional testing tools or frameworks that can aid in catching critical issues.

Introduce peer reviews or pair testing sessions to ensure a higher level of scrutiny.

Note: Modify the questions to suit your specific circumstances.

Note: These are mere recommendations. You will turn these recommendations into action plans by presenting them to the team and stakeholders and obtaining their formal approval.

All the information you have collected so far, from step 1 to step 5, makes up the report you need to get stakeholder buy-in. From this point on, you work with the stakeholders to find a solution to the problem at hand.

A3 = Reporting + Problem Solving

6. Action Plan:

After you spot the main issues and causes and make a list of countermeasures, meet with your stakeholders along with your team. Explain the problems and your suggested solutions to ensure everyone understands and agrees.

For every agreed-upon countermeasure, discuss and decide on an action plan, making sure everyone (including the stakeholders) knows their part. Use the "4W and 1H" technique (Who, What, Where, When, How) to clarify action items.

Let’s say the following countermeasures are agreed upon:

Implement a consistent method for tracking team velocity to predict future capacity.

Organize mid-sprint check-ins with stakeholders.

Invest in training sessions on advanced testing techniques.

Using the "4W and 1H" technique, the following action items were identified:

Implement a consistent method for tracking team velocity and capacity to predict future capacity.

Who: Scrum Master and Development Team.

What: Adopt and integrate a method to track velocity and team capacity into the current workflow.

Where: Integrated into the team's Agile tool (such as Jira or Trello).

When: Beginning of the next Sprint.

How: Scrum Master introduces the tool/method during Sprint Planning, trains the team on its use, and oversees its application throughout the Sprint.

Organize mid-sprint check-ins with stakeholders.

Who: Scrum Master, Product Owner, and Key Stakeholders.

What: Establish a recurring mid-Sprint review/check-in meeting.

Where: In a dedicated meeting room or a virtual platform if remote.

When: Midway through every Sprint.

How: Scrum Master sets up recurring meetings, the team prepares progress highlights, and time is allotted for feedback and questions.

Invest in training sessions on advanced testing techniques.

Who: Development Team and QA team, with potential external trainers.

What: Attend workshops or training sessions on improved testing methodologies.

Where: On-site in a training room or virtual webinars if remote.

When: Over the next two Sprints.

How: Scrum Master or Test Lead identifies relevant training sessions, schedules them for the team, and ensures that learnings are then integrated into the team's testing protocols.
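If you track action items outside a slide deck, recording each one with the same five fields makes missing answers easy to spot before the meeting ends. Here is a minimal Python sketch. The `ActionItem` class is hypothetical and mirrors the Who/What/Where/When/How fields used above; the example deliberately leaves "Where" blank.

```python
from dataclasses import dataclass, fields


@dataclass
class ActionItem:
    """One action item described with '4W and 1H'."""
    countermeasure: str
    who: str
    what: str
    where: str
    when: str
    how: str

    def gaps(self) -> list[str]:
        """Names of fields left blank; an item isn't ready until this is empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


item = ActionItem(
    countermeasure="Organize mid-sprint check-ins with stakeholders",
    who="Scrum Master, Product Owner, and Key Stakeholders",
    what="Establish a recurring mid-Sprint review/check-in meeting",
    where="",  # not decided yet
    when="Midway through every Sprint",
    how="Scrum Master sets up recurring meetings",
)
print(item.gaps())  # ['where']
```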

Then, make sure your team has what they need to carry out the plan. This might be tools, training, or meeting spaces.

7. Follow Up:

In the same meeting, discuss the measures for success. You can initiate a discussion by asking the following questions:

The answers to these questions are crucial for the problem-solving to work. Work with the people in the room to find the answers.

Below are my suggestions for these questions:

1. How will you determine if the disruptions from customer issues have lessened and the team's sprint performance has improved?

My suggestion: Track both the frequency of customer disruptions and sprint velocity to see whether they're correlated. Rising velocity alongside fewer disruptions indicates a narrowing performance gap. If that doesn't happen, revisit the countermeasures and refine them.

2. How will you validate that sprint goals are being achieved consistently without being overshadowed by urgent customer issues?

My suggestion: You can introduce a "disruption index" metric in your sprint reviews.

What is the disruption index?

It’s a metric that quantifies the impact of disruptions on the sprint's progress.

Here's a simple way to calculate the disruption index:

Quantifying Disruptions:

# of Disruptions (D): Track the number of times an unplanned customer issue disrupts the sprint.

Average Duration of Disruptions (T): Measure how much time the team spends addressing each disruption, then average these durations over the sprint. D x T is then the total time lost to disruptions.

Quantifying Sprint Work:

# of Hours in the Sprint (H): This is the total number of hours the team would work during a sprint without any disruptions: the number of hours per day multiplied by the number of days in the sprint multiplied by the number of team members.

Calculating the Disruption Index:

Disruption Index (DI) = (D x T) / H

For example, let's assume:

During a 2-week sprint (10 days) with a team of 5 working 8 hours/day:

Total Work Hours (H) = 10 days x 5 team members x 8 hours/day = 400 hours

There were 10 disruptions taking 20 hours in total, so each one took 2 hours on average:

D = 10

T = 20 hours / 10 disruptions = 2 hours

Using the formula,

Disruption Index (DI) = (10 disruptions x 2 hours) / 400 hours = 0.05 or 5%.

This means that 5% of the team's capacity was consumed by unplanned customer issues.

If goals are being missed in sprints with a high disruption index, it validates the concerns. Conversely, a reduction in the disruption index paired with met goals indicates SUCCESS!!!
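If you log the hours spent on each disruption, the index can be computed automatically at every sprint review. Here is a minimal Python sketch; `disruption_index` and the example log are hypothetical. For DI to read as a share of capacity, D x T must equal the total hours spent on disruptions, so T here is the average duration per disruption.

```python
def disruption_index(disruption_hours: list[float], days: int,
                     team_size: int, hours_per_day: float) -> float:
    """Share of the sprint's capacity spent on unplanned customer issues.

    D = number of disruptions, T = average hours per disruption,
    H = days x team size x hours per day, DI = (D x T) / H.
    """
    capacity = days * team_size * hours_per_day  # H
    d = len(disruption_hours)                    # D
    if d == 0:
        return 0.0
    t = sum(disruption_hours) / d                # T (average)
    return d * t / capacity


# Ten disruptions totalling 20 hours in a 10-day sprint, 5 people, 8 hours/day.
log = [1, 3, 2, 2, 1, 4, 2, 1, 2, 2]
print(f"{disruption_index(log, days=10, team_size=5, hours_per_day=8):.0%}")  # 5%
```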

Back to the questions!

3. What unforeseen challenges or secondary effects might arise from focusing on minimizing customer disruptions during sprints?

My suggestion: A potential side effect you can anticipate is the team becoming too risk-averse. Another is stakeholders feeling their urgent needs aren't addressed promptly. If these are observed, you might need to balance risk-taking and stakeholder communication.

4. What backup strategies or approaches are in place if the main countermeasures against customer disruptions don't yield the expected results?

My suggestion: You can consider buffer periods within sprints for addressing unplanned disruptions. Another option is setting up a dedicated team or subgroup to handle these disruptions. If these don't provide the desired outcome, a deeper dive into the nature of customer disruptions might be necessary.

5. Which processes or practices will you establish to ensure that the countermeasures remain effective and are consistently adopted in the long run?

My suggestion: You can integrate the countermeasures into the team's definition of done and working agreements.

Note: These are mere suggestions based on what I have experienced with my teams.

Final Thought

This last step is super important if we want to keep getting better. We have to see if what we did actually worked out the way we thought it would.

Whatever the outcome, positive or negative, act on it.

If the predicted results don't match the actual ones, adjust the plan, implement it again, and monitor progress. If a positive outcome is achieved, communicate the improvements to the entire organization and implement them as the new normal.


This is it 🙏

If you have any questions (related to this topic), don’t forget to use the comments section to ask.

🙋🏻♂️ Your Questions!

If you want me to answer your questions in this newsletter, please send them my way using this link - Send me your questions.

If you’re finding this newsletter valuable, consider sharing it with friends or subscribing if you aren’t already.

Till next week!

Sincerely,

Vibhor 👋

P.S. Let me know what you think! Is this useful? What could be better? I promise you won’t hurt my feelings. This is an experiment, and I need feedback. You can reply to this email to send your message directly to my inbox.

“I share things I wish I knew in the starting years of my career in the corporate world.”

Vibhor Chandel
