Applying Scrum to a Data Analytics Team

Learn the thinking process behind applying Scrum to different domains

Welcome to the 🔥 free edition 🔥 of Winning Strategy: a newsletter focused on enhancing product, process, team, and career performance. This newsletter shares insights gained from real-world experiences that I gathered working at Twitter, Amazon, and now as an Executive Product Coach at one of North America's largest banks.

I think it is safe to assume that most of us have worked on a Scrum team in the software development space.

We've witnessed how development teams break down features into user stories, plan sprints, and deliver working software incrementally. The framework seems to work seamlessly there – after all, it was born in software development.

But what happens when we step into the world of Data Analytics?

Can we simply lift and shift our Scrum practices from software development to data teams?

After doing some research, I found that the answer… isn't straightforward.

Data analytics teams operate in a different paradigm. Their work often involves experimental analysis. Unlike software development, where feature requirements are typically well-defined, data projects can evolve significantly as insights emerge.

So… what’s the way forward?

How do we answer questions like:

How do we define a "potentially shippable increment" in data analytics?

What constitutes a "user story" when the team is building predictive models?

How do we handle sprint planning?

Can we maintain the same sprint cadence when dealing with data uncertainties?

These questions highlight the need for thoughtful adaptation.

I have found that Scrum's pillars (transparency, inspection, and adaptation) remain relevant. But the way we implement them changes.

All this and more in the rest of the post.

Let’s get started.


How does a Data Analytics team function?

Let's peek into their world...let’s see how it differs from our well-known “software development” world.

Unlike software teams that build features, data analytics teams solve puzzles.

Complex puzzles.

Their typical day? It varies... a lot.

Sometimes, they're diving deep into data cleaning – making sense of messy, incomplete, or inconsistent data.

Other times?

They're building complex models, testing hypotheses, or creating visualizations that tell a story.

Their work typically falls into these patterns:

Exploratory Analysis

Going through data... finding patterns... discovering insights...

Sometimes they don't even know what they're looking for until they find it.

Model Building

Creating predictive models... testing them... tweaking parameters...

And then starting over when the accuracy isn't quite there.

Dashboard Creation

Taking complex data and making it simple... visual... actionable.

But wait... the stakeholder wants to see it differently.

Back to the drawing board.

Ad-hoc Requests

"Can you quickly pull these numbers?"

"We need this analysis by tomorrow..."

They frequently get urgent requests that disrupt their planned work.

Their challenges?

Let’s just say they are a bit…unique.

Data Quality Issues

Missing data... incorrect data... outdated data...

The foundation of their work isn't always solid.

Unclear Requirements

"We need insights about customer behaviour."

But what specific behaviour? For which customer segment? Over what time period?

Timeline Uncertainties

How long will it take to clean this dataset?

Will this model ever reach acceptable accuracy?

Nobody knows... until they try.

Stakeholder Dependencies

Waiting for data access...

Waiting for business context...

Waiting for requirement clarity...

Their Deliverables?

They're also not as clear-cut as a new feature or bug fix.

Sometimes it's:

A statistical model

A dashboard

An insight report

A recommendation

A dataset

A visualization

The real deliverable is often the insight itself.

The "aha moment" that helps the business make better decisions.

And those moments? They don't always arrive on a “sprint” schedule.

This is the world we're trying to fit into a Scrum framework.

Interesting challenge, isn't it?

But let me tell you… some smart, insightful people have actually made it work.

This is true Agility.

Let's see how they did it.

Spoiler alert: It wasn’t a straightforward journey.

Domain Overview Generator Tool

If you’re a Scrum Master, Product Owner, or Project Manager looking to switch domains (e.g., from software to data analytics or healthcare), you can use this tool I created to help with your transition.

It gives you just enough information to help you move to any domain. As a valued paid member of Winning Strategy, you can access the beta release of the Domain Overview Generator tool for FREE by clicking on the link below.

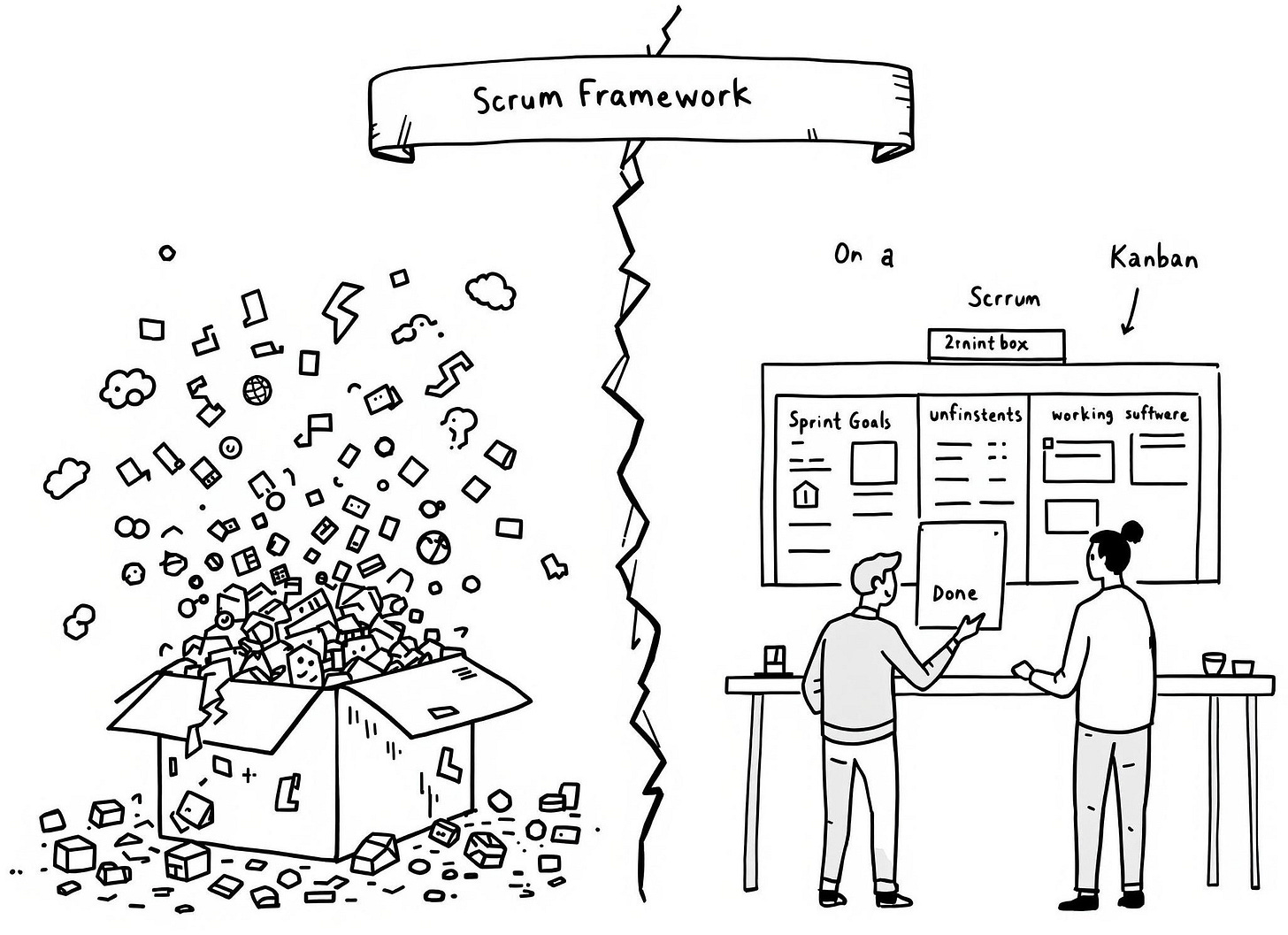

First, the data teams tried to apply Scrum

And, well... it didn't go as planned.

Many data teams started with enthusiasm, thinking..."Hey, if it works for software development, why not for us?"

But then… four to five months in... disaster struck.

What went wrong?

They tried to fit their complex, unpredictable work into neat 2-week boxes.

And the boxes… they broke.

Here's what happened:

The Sprint Problems

The concept of fixed-length sprints clashed with the unpredictable nature of their work.

Data projects are full of surprises. Underperforming models or messy datasets can completely derail sprint plans.

Exploratory analysis and model tuning didn’t fit neatly into a sprint. At the end of sprints, there wasn’t always something tangible to show. No “potentially shippable increment.” Just half-formed insights and experiments waiting to be refined.

This left teams frustrated.

Sprint goals were missed.

Stakeholders were left wondering what was accomplished.

The Estimation Problems

Estimating data tasks was nearly impossible.

“How long will it take to clean this dataset?”

“When will the model reach acceptable accuracy?”

Nobody knew.

In a software project, it’s easier to break work into clear, measurable chunks. But in data analytics, progress isn’t linear. Insights emerge slowly, often after cycles of trial and error.

So…sprint planning became a guessing game.

And when estimates turned out wrong (which they often did), the team faced constant disruptions.

Deliverable Problems

Scrum’s focus on delivering working software didn’t translate well to data analytics.

What is a deliverable in the world of data?

A cleaned dataset?

A partially trained model?

An insight that still needs validation?

None of these felt like a “finished product.”

And stakeholders?

They didn’t always understand the value of incremental progress.

The result?

Teams felt pressure to rush their work, sacrificing quality for the sake of delivering “something” by the end of the sprint.

Specialization Problems

Another challenge was forming cross-functional teams.

Data analytics requires specialists: data engineers, analysts, machine learning experts. But their skills often don’t overlap.

Instead of collaborating on the same tasks, they worked independently, creating silos within the team.

The Result

For many teams, trying to implement Scrum “as is” led to frustration and failure.

It became clear:

Scrum needed to change to fit the world of data analytics.

But how?

Next best option — Kanban

Then, they tried to apply Kanban

When Scrum didn’t work, they turned to something simpler.

Kanban.

No sprints. No rigid frameworks. Just a board and a flow.

At first, it seemed like the perfect solution.

The team could focus on their work without worrying about sprint deadlines. They could pick tasks off the board and get things done.

But over time, it happened again…

Problem #1: Lack of Structured Meetings

Kanban doesn’t define specific meetings.

Initially, the team loved this. No planning sessions. No retrospectives. Just heads-down work.

But soon, it became clear that something was missing.

Nobody was aligned on priorities

Bottlenecks went unnoticed for too long

Communication with stakeholders became inconsistent

Without regular touchpoints, the team lost clarity. Stakeholders were frustrated because they didn’t understand the value of what was being delivered—or if it was even worth delivering at all.

Problem #2: No Focus on Process Improvement

Kanban emphasizes limiting work in progress (WIP).

The team tried to reduce WIP where they could. But beyond that, there was no structured way to improve their process.

There were no retrospectives, no brainstorming sessions, and no time set aside to figure out what was working—or what wasn’t.

The team kept moving, but they weren’t getting better.

Problem #3: Stakeholder Confusion

The continuous flow of work created challenges for stakeholders.

There were no clear milestones or deadlines. Stakeholders didn’t know:

When to test completed work

When to provide feedback

When to expect deliverables

This lack of structure made communication harder. Coordinating with stakeholders or giving them a sense of progress was difficult.

The Result

Kanban felt more flexible than Scrum, but it wasn’t solving all their problems.

The team needed something in between.

A framework that provided structure—without being rigid.

So, they kept experimenting.

This time with a hybrid.

They tried Scrumban

At this point, the team had learned:

Scrum was too rigid. Kanban was too loose.

So they thought... "Why not take the best of both?"

Let’s try Scrumban.

The idea was simple:

Keep the helpful structure from Scrum

Add the flow from Kanban

Mix well

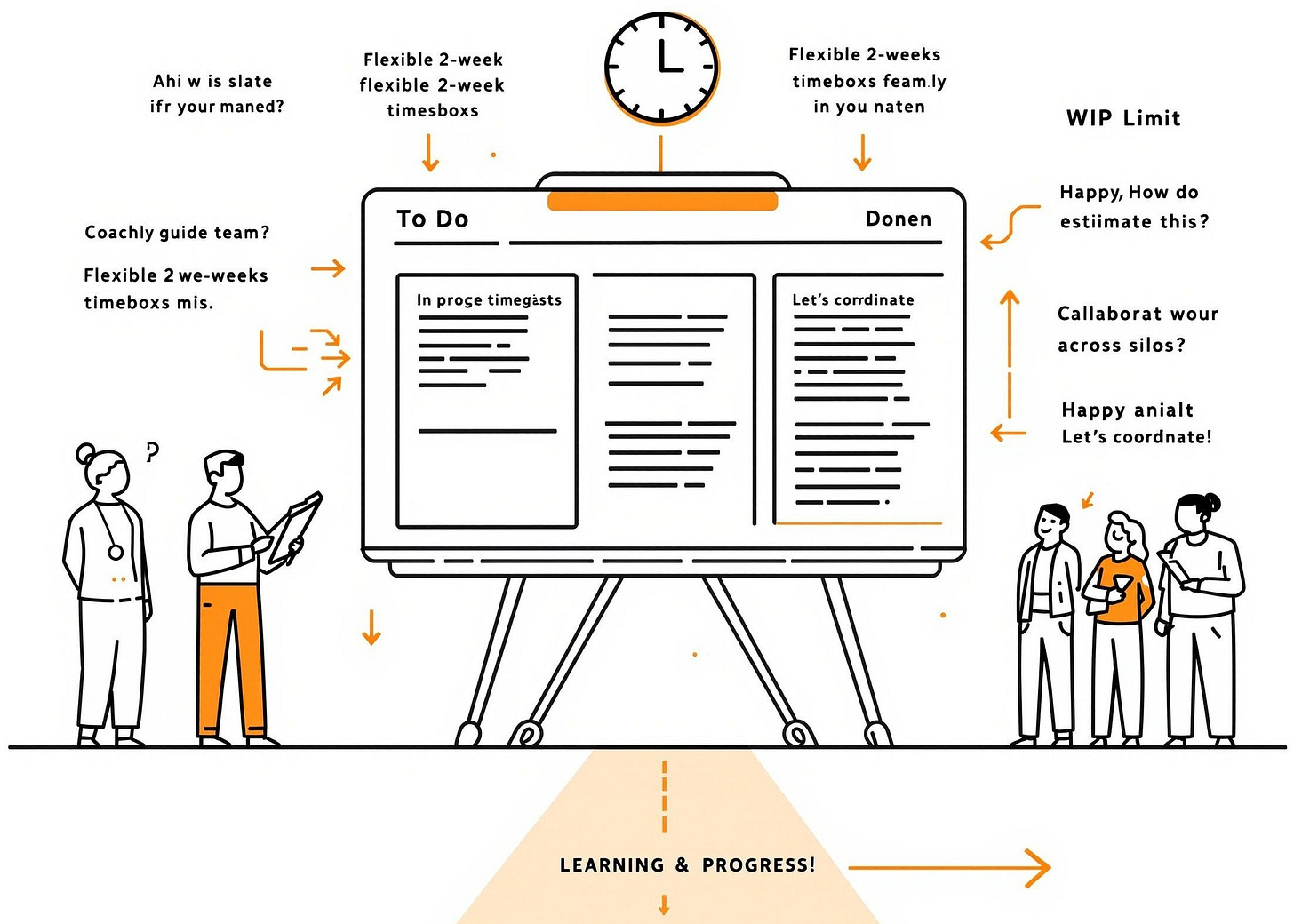

It combined the structure of Scrum with the flexibility of Kanban. And for a while, it seemed like they had found a sweet spot.

Here's what they tried:

They kept the 2-week timeboxes from Scrum

(But made them more flexible)

They used Kanban boards for visualization

(Because seeing the work helps manage it)

They set WIP limits

(To stop drowning in too many parallel tasks)
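To make the WIP-limit idea concrete, here is a minimal sketch of a board that refuses to take on more work than a column allows. Everything in it is illustrative: the `Board` class, the column names, the task names, and the limit of 2 are assumptions for the example, not something the teams described.

```python
class WipLimitExceeded(Exception):
    """Raised when a task would push a column past its WIP limit."""


class Board:
    def __init__(self, wip_limits):
        # Column name -> maximum number of tasks allowed (None means no limit).
        self.wip_limits = dict(wip_limits)
        self.columns = {name: [] for name in self.wip_limits}

    def _check_room(self, column):
        limit = self.wip_limits[column]
        if limit is not None and len(self.columns[column]) >= limit:
            raise WipLimitExceeded(f"{column!r} is already at its limit of {limit}")

    def add(self, task, column):
        self._check_room(column)
        self.columns[column].append(task)

    def move(self, task, source, target):
        if task not in self.columns[source]:
            raise ValueError(f"{task!r} is not in {source!r}")
        # Check the target before removing, so a rejected move changes nothing.
        self._check_room(target)
        self.columns[source].remove(task)
        self.columns[target].append(task)


# Hypothetical board: at most two tasks may be in progress at once.
board = Board({"To Do": None, "In Progress": 2, "Done": None})
for task in ["clean dataset", "train model", "build dashboard"]:
    board.add(task, "To Do")

board.move("clean dataset", "To Do", "In Progress")
board.move("train model", "To Do", "In Progress")

try:
    board.move("build dashboard", "To Do", "In Progress")
except WipLimitExceeded as err:
    blocked = str(err)  # the third task waits until something finishes
```

The point of the limit is the rejected move: the team has to finish something before starting something new.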

Some interesting things started happening...

Teams began sharing Product Owners

(This gave them some coordination!)

Cross-team collaboration improved

(Because data people need to talk to other data people)

They could actually show something to stakeholders

It seemed promising...

But...

There's always a “but”…

Scrumban wasn’t perfect either.

Coaching Investment: The hybrid model required significant coaching to get everyone on the same page. Teams needed guidance from “experts” who had experience in such situations.

Estimation Struggles: While the process improved, estimating exploratory work remained a challenge. Some tasks still took longer than expected, disrupting the flow.

Problems. Problems. Problems…

But something was different this time...

The team was getting closer to what they needed.

They weren't quite there yet...But they were learning.

And that learning led them to their next discovery...

Finally, they adopted a new process: Data Driven Scrum

After their experience with Scrum, Kanban, and Scrumban, the data teams realized they needed something truly “tailored” to their work.

Something designed for data analytics.

That’s when they discovered Data Driven Scrum.

A framework built specifically for data teams by people who understood data science.

Here's what made DDS, as they usually like to call it, different.

The Core Idea:

Instead of forcing fixed time boxes, DDS focused on “experiments.” Each iteration was about answering a specific question.

Three key principles:

The DDS framework introduced three key concepts to ensure agility and productivity:

Iterative Experimentation and Adaptation:

Each iteration was focused on completing a full cycle of experimentation: starting with an idea, building and implementing it, observing the outcomes, and analyzing the results. This allowed the team to test hypotheses and quickly adapt based on findings.

Natural Endings:

Unlike “time-boxed sprints” in traditional Scrum, DDS iterations were capability-driven, meaning an iteration ended when the defined experiment was completed, not after a predetermined time period. This gave the team the flexibility to handle tasks with uncertain durations.

Collective Analysis:

Observation and analysis were core to DDS. Unlike traditional Scrum, where analysis is usually outside the codified process, DDS made it a team effort.

DDS Workflow:

Brainstorm and Prioritize:

The team brainstormed experiments to answer questions (e.g. "Does age impact customer satisfaction?"). These were prioritized on a backlog based on value, effort, and probability of success.

Define Experiments:

Each backlog item was broken into tasks using the Item Breakdown Board, with at least one task for creating, observing, and analyzing. For example, the age-satisfaction experiment included tasks for data preparation, calculation of metrics, and geographic analysis.

Track Progress:

Tasks were visualized on a Task Board with columns such as “To Do,” “In Progress,” “Validate,” “Observe,” and “Analyze.” WIP limits ensured that tasks moved smoothly across the board.

Daily Meetings:

Like the daily scrum, the team met daily to review progress, resolve roadblocks, and ensure alignment.

Iteration Reviews:

Once an iteration was complete, the team reviewed outcomes with stakeholders, shared findings, and prioritized new backlog items based on insights gained.

Continuous Improvement:

“Monthly” retrospectives were held to refine processes and identify actionable improvements.
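The backlog step above ranks experiments by value, effort, and probability of success, but the workflow does not say how the three are combined. One possible sketch, assuming a simple "expected value per unit of effort" score (my assumption, not a DDS rule), looks like this. The `Experiment` class, the numbers, and the two extra questions are made up for illustration; only the age-satisfaction question comes from the example above.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    question: str
    value: float      # estimated business value, arbitrary units
    effort: float     # estimated effort, e.g. person-days (must be > 0)
    p_success: float  # estimated probability of a useful answer, 0 to 1

    def score(self) -> float:
        # Assumed scoring rule: expected value per unit of effort.
        return self.value * self.p_success / self.effort


def prioritize(backlog):
    """Return experiments ordered from highest to lowest score."""
    return sorted(backlog, key=lambda e: e.score(), reverse=True)


backlog = [
    Experiment("Does age impact customer satisfaction?", value=8, effort=4, p_success=0.5),
    Experiment("Does region affect churn?", value=10, effort=10, p_success=0.9),
    Experiment("Are survey response rates dropping?", value=3, effort=1, p_success=0.5),
]

ranked = prioritize(backlog)
for exp in ranked:
    print(f"{exp.score():.2f}  {exp.question}")
```

A cheap, small question can outrank a bigger one here, which is often what you want when the team is still learning what the data can answer. Treat the score as a conversation starter for the Product Owner rather than a verdict.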

The Team Structure:

Product Owner

Process Expert (the Scrum Master)

Team Members (3-9 data professionals)

Metrics:

To measure their success, the team tracked two key metrics:

Top Item Time: The percentage of time spent on the top two priorities, ensuring focus on the most valuable tasks

Cycle Iteration Time: How long iterations really took
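Both metrics are simple arithmetic once the team logs its time and its iteration dates. The sketch below shows one way to compute them. The function names, the hours log, and the dates are hypothetical, and I am assuming "time" means logged hours and iteration length is measured in calendar days.

```python
from datetime import date
from statistics import mean


def top_item_time(hours_by_item, ranked_items, top_n=2):
    """Percentage of logged hours spent on the top_n highest-priority items."""
    total = sum(hours_by_item.values())
    if total == 0:
        return 0.0
    top = set(ranked_items[:top_n])
    top_hours = sum(h for item, h in hours_by_item.items() if item in top)
    return 100.0 * top_hours / total


def iteration_days(start, end):
    """Length of one iteration in calendar days."""
    return (end - start).days


# Hypothetical log for one iteration: hours spent per backlog item.
hours = {"age-satisfaction": 30, "churn-model": 10, "ad-hoc pull": 10}
ranking = ["age-satisfaction", "churn-model", "ad-hoc pull"]  # highest priority first

focus = top_item_time(hours, ranking)  # share of hours on the top two items

# Hypothetical iterations; lengths differ because each ends when its experiment does.
iterations = [
    (date(2025, 1, 6), date(2025, 1, 15)),
    (date(2025, 1, 16), date(2025, 2, 3)),
]
lengths = [iteration_days(start, end) for start, end in iterations]
average_length = mean(lengths)
```

Tracked over time, a falling Top Item Time usually means ad-hoc requests are crowding out the prioritized work.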

It wasn't perfect...But it was built for them.

And that made all the difference.

Here’s a detailed comparison between the processes:

You can learn more about DDS here.

The Journey Forward

The data team’s journey highlights an essential truth: no single framework fits every situation perfectly.

Scrum, Kanban, and Scrumban each offered valuable lessons, but the real breakthrough came when the team “tailored” their process to fit their unique needs.

This adaptability is the key to success in any project.

Frameworks like Scrum provide a solid foundation, but they must be treated as starting points — not rigid rules. By experimenting and welcoming change, teams can build a process that works for them rather than forcing their work into a predefined mold.

Whether it’s:

adjusting timeboxes,

redefining roles, or

creating new workflows

the best teams recognize that Agility means adapting the process to the work, not the other way around.

start with a framework that fits most of your needs

experiment and observe,

adapt,

then let the process evolve to suit your team’s goals and challenges

The true power of Agile lies in its flexibility and the ability to tailor it to thrive in any situation.

The best processes are not pre-defined. They’re CREATED.

Research

https://research.ou.nl/ws/portalfiles/portal/45243491/09140255.pdf

https://mode.com/blog/what-is-agile-analytics/

https://www.scrum.org/forum/scrum-forum/82025/scrum-data-analytics-projects

https://www.datascience-pm.com/wp-content/uploads/2024/12/Achieving-Agile-Data-Science-Case-Study-2025.pdf

https://www.lonti.com/blog/agile-data-management-a-comprehensive-guide

https://towardsdatascience.com/agile-data-science-data-science-can-and-should-be-agile-c719a511b868

https://iianalytics.com/community/blog/dont-make-data-scientists-do-scrum

https://towardsdatascience.com/how-to-make-agile-actually-work-for-analytics-e8fb2290276e

https://www.researchgate.net/publication/385980314_A_case_study_on_Channel_4_Navigating_Big_Data_Analytics_with_scrum_methodology_in_the_broadcasting_industry

https://atlan.com/agile-data-governance-model/
